Whither hobby computing?

David Stonier-Gibson

The world is changing rapidly, not least in the realm of computing. Computing today is vastly different to what it was when Melb PC was established 37 years ago, with terms like The Cloud, The Edge, AI, Machine Learning and Quantum Computing, not to mention tablets, smartphones and home assistants. Far from being mysterious machines in air-conditioned fortresses, computers are now embedded in almost every gadget, appliance or tool we use every day.

When I started my engineering studies in Perth in 1967, we had a first-semester subject, “Slide rule 101”. In second semester we got “Computer programming 102”, and I started to discover the joys of Fortran IV, punched cards, fan-fold paper and a five-day turnaround cycle resulting in “102 syntax errors detected – 10K core used”, all using a room-sized computer nestled behind an uninviting glass wall and tended by stern-faced acolytes in white dust coats.

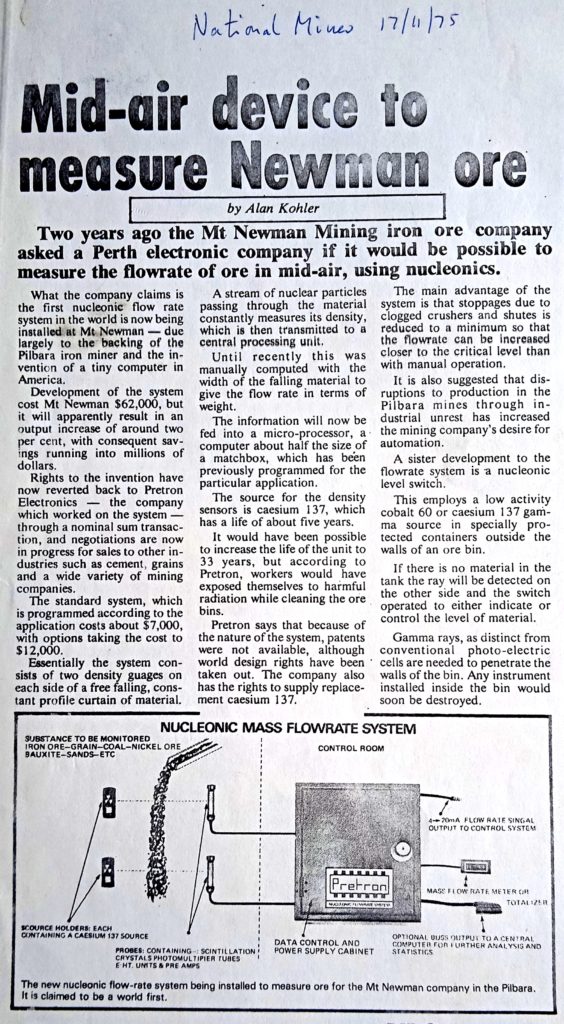

By the mid-70s I had developed a sophisticated industrial instrument for Mount Newman Mining, using a microprocessor (16-bit, clocked at 2 MHz) and programmed with a floating-point package I wrote myself. I still have a clipping of a write-up of it by a young Alan Kohler, describing the wonder of “a computer half the size of a matchbox”.

That was before the days of word processors and spreadsheets. Around 1978 I was working, in my spare time, on a primitive word processor program, and I independently conceived the idea of a spreadsheet. I lacked the resources (initiative?) to follow through on the spreadsheet, and hence missed out on becoming a billionaire! Maybe?

Also in 1978 I bought myself a TRS-80, an early consumer-grade personal computer, and hence had one-on-one access to a computer at home as well as at work. The following year Apple got VisiCalc, the first spreadsheet program, and sales of their personal computers rocketed on Wall Street.

Those were heady days for me, with Byte magazine, programming in BASIC as well as Z80 and 8085 assembler, and designing embedded microprocessor applications. I even got the opportunity to design my very own mask-programmed chip for radio signalling. That had to be right first time, as the minimum order quantity was 5,000 chips!

The day the world changed

On August 12, 1981 IBM announced their PC, and small desktop personal computers became respectable; nobody was ever fired for buying IBM! Three years later our club was started by a group of tech geeks who came together around their shared passion for the IBM PC. They were so – dare I say emotionally – beholden to the IBM PC that their logo echoed the IBM logo. It was indeed a club for its time.

Much has changed since then. The trajectory of the desktop personal computer has been, in many ways, linear and evolutionary, rather than revolutionary. While the power and capacities of the desktop have increased exponentially (speed 20,000-fold, memory 250,000-fold, storage 1,000,000-fold), a desktop computer today would still be recognisable if you could take it back to 1984.

What a 1984 Melb PC member would not be able to wrap her head around is the Internet, smartphones, smart TVs, tablet computers, home assistants, handfuls of computer chips embedded in every car and in virtually every home appliance, and wristwatch telephones. Dick Tracy was pure fiction back then. The same goes for so many of the things we now take for granted and which present-day tech geeks love to play with: home automation, 3D printers, microcomputer-controlled (CNC) machining, digital photography. Not to mention the electronics hobby transforming into something centred on $10 boards with more power than an original IBM PC.

The future, 1930s style

So where is it all headed?

In my own field of industrial and machine controls, I am seeing not only the available processing power ballooning but also a seismic change in the underlying programming methodologies. The idea that a machine controller had to be deterministically programmed for every conceivable circumstance is being replaced by neural networks, artificial intelligence, and non-deterministic machine learning, some in the Cloud, some at the Edge. Much of my professional expertise is being rendered obsolete. I am having trouble dragging my skills into the 2020s, and I must acknowledge being somewhat out of touch with the needs and interests of younger tech geeks. Conceivably the air conditioner of the future will leave the factory with only a “basic education” and learn the rest on the job, working out the optimum settings for its owner’s lifestyle and desires. (Fun fact: that’s already happening! https://support.google.com/googlenest/answer/9247510?hl=en).

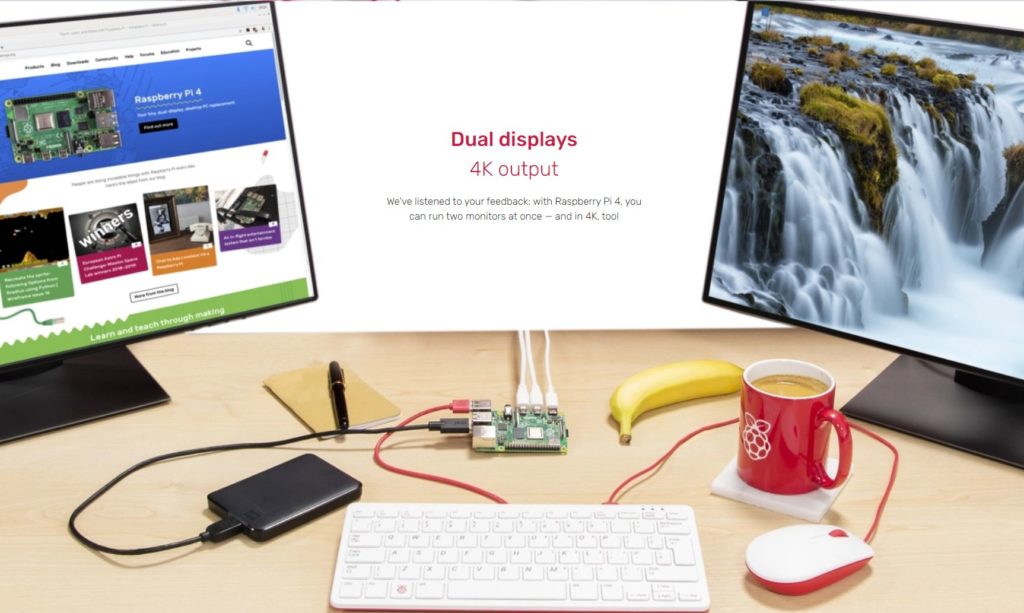

In the hobby space, the modern-day tech geek is using tiny products that for $10 provide built-in Wi-Fi and Bluetooth, megabytes of memory and roughly 200x the speed of an original IBM PC. For $5 he can get something more modest, but still sufficient to control a smart robot car made on his 3D printer. And for under $100 he can buy a computer board capable of running a full operating system and driving two large displays.

What this means to me is that in the hobby space, just as in the wider world, computing has become a component embedded in other products, other interests, other hobbies.

The tech geeks of 1984 were focused on the desktop. Today’s tech geeks are much more about embedding computing power in their other hobby products. What will tomorrow’s tech geeks be seeking?

Footnote: I applied to the Guardian for permission to use their 2011 obituary of Daniel McCracken, author of the Fortran textbook I had, and of many other language books. The Guardian wanted £200 for the rights. Links are free … https://www.theguardian.com/technology/2011/aug/29/daniel-mccracken-obituary

It’s an interesting read; he was quite a guy.